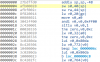

```diff
peh@vidar:~> diff -u /home/FritzBox/FB7590/GPL/linux/net/ipv4/ip_forward.c /home/FritzBox/FB7490/firmware/GPL_07.01/linux-3.10/net/ipv4/ip_forward.c
--- /home/FritzBox/FB7590/GPL/linux/net/ipv4/ip_forward.c 2018-08-30 14:14:19.000000000 +0200
+++ /home/FritzBox/FB7490/firmware/GPL_07.01/linux-3.10/net/ipv4/ip_forward.c 2018-07-26 14:01:40.000000000 +0200
@@ -38,10 +38,6 @@
 #include <linux/route.h>
 #include <net/route.h>
 #include <net/xfrm.h>
-
-#ifdef CONFIG_AVM_PA
-#include <linux/avm_pa.h>
-#endif
 
 static bool ip_may_fragment(const struct sk_buff *skb)
 {
@@ -108,7 +104,7 @@
 	return ret;
 }
 
-static int __ipt_optimized ip_forward_finish(struct sk_buff *skb)
+static int ip_forward_finish(struct sk_buff *skb)
 {
 	struct ip_options *opt = &(IPCB(skb)->opt);
 
@@ -118,15 +114,17 @@
 
 	if (unlikely(opt->optlen))
 		ip_forward_options(skb);
-	if (ip_gso_exceeds_dst_mtu(skb))
-		return ip_forward_finish_gso(skb);
 #ifdef CONFIG_AVM_PA
 	avm_pa_mark_routed(skb);
 #endif
+
+	if (ip_gso_exceeds_dst_mtu(skb))
+		return ip_forward_finish_gso(skb);
+
 	return dst_output(skb);
 }
 
-int __ipt_optimized ip_forward(struct sk_buff *skb)
+int ip_forward(struct sk_buff *skb)
 {
 	struct iphdr *iph;	/* Our header */
 	struct rtable *rt;	/* Route we use */
@@ -138,10 +136,9 @@
 	if (skb_warn_if_lro(skb))
 		goto drop;
 
-/*Policy check disabled for IPSec DS MPE accelerated traffic */
-
 	if (!xfrm4_policy_check(NULL, XFRM_POLICY_FWD, skb))
 		goto drop;
+
 	if (IPCB(skb)->opt.router_alert && ip_call_ra_chain(skb))
 		return NET_RX_SUCCESS;
 
@@ -189,9 +186,7 @@
 	    !skb_sec_path(skb))
 		ip_rt_send_redirect(skb);
 
-#ifndef CONFIG_LANTIQ_IPQOS
 	skb->priority = rt_tos2priority(iph->tos);
-#endif
 
 	return NF_HOOK(NFPROTO_IPV4, NF_INET_FORWARD, skb, skb->dev,
 		       rt->dst.dev, ip_forward_finish);
```

```diff
peh@vidar:~> diff -u /home/FritzBox/FB7590/GPL/linux/net/core/dev.c /home/FritzBox/FB7490/firmware/GPL_07.01/linux-3.10/net/core/dev.c
--- /home/FritzBox/FB7590/GPL/linux/net/core/dev.c 2018-08-30 14:14:19.000000000 +0200
+++ /home/FritzBox/FB7490/firmware/GPL_07.01/linux-3.10/net/core/dev.c 2018-07-26 14:01:40.000000000 +0200
@@ -72,6 +72,13 @@
  *				- netif_rx() feedback
  */
 
+/**
+ * Some part of this file is modified by Ikanos Communications.
+ *
+ * Copyright (C) 2013-2014 Ikanos Communications.
+ */
+
+
 #include <asm/uaccess.h>
 #include <linux/bitops.h>
 #include <linux/capability.h>
@@ -97,14 +104,12 @@
 #include <net/net_namespace.h>
 #include <net/sock.h>
 #include <linux/rtnetlink.h>
+#if defined(CONFIG_IMQ) || defined(CONFIG_IMQ_MODULE)
+#include <linux/imq.h>
+#endif
 #ifdef CONFIG_AVM_PA
 #include <linux/avm_pa.h>
 #endif
-#ifdef CONFIG_AVM_SIMPLE_PROFILING
-#include <linux/avm_profile.h>
-#else
-#define avm_simple_profiling_skb(a,b) do { } while(0)
-#endif
 #include <linux/stat.h>
 #include <net/dst.h>
 #include <net/pkt_sched.h>
@@ -137,18 +142,11 @@
 #include <linux/inetdevice.h>
 #include <linux/cpu_rmap.h>
 #include <linux/static_key.h>
-#if defined(CONFIG_IMQ) || defined(CONFIG_IMQ_MODULE)
-#include <linux/imq.h>
-#endif
 
 #include "net-sysfs.h"
-
-#if defined(CONFIG_LTQ_UDP_REDIRECT) || defined(CONFIG_LTQ_UDP_REDIRECT_MODULE)
-#include <net/udp.h>
-#include <linux/udp_redirect.h>
+#ifdef CONFIG_FUSIV_KERNEL_PROFILER_MODULE
+extern int loggerProfile(unsigned long event);
 #endif
-
-
 /* Instead of increasing this, you should create a hash table. */
 #define MAX_GRO_SKBS 8
 
@@ -160,12 +158,8 @@
 struct list_head ptype_base[PTYPE_HASH_SIZE] __read_mostly;
 struct list_head ptype_all __read_mostly; /* Taps */
 static struct list_head offload_base __read_mostly;
-
-#ifdef CONFIG_LANTIQ_MCAST_HELPER_MODULE
-int (*mcast_helper_sig_check_update_ptr)(struct sk_buff *skb) = NULL;
-EXPORT_SYMBOL(mcast_helper_sig_check_update_ptr);
-#endif
-
+static int (*avm_recvhook)(struct sk_buff *skb);
+static int (*avm_early_recvhook)(struct sk_buff *skb);
 
 /*
  * The @dev_base_head list is protected by @dev_base_lock and the rtnl
@@ -191,8 +185,6 @@
 
 seqcount_t devnet_rename_seq;
 
-struct sk_buff *handle_ing(struct sk_buff *skb, struct packet_type **pt_prev,
-		int *ret, struct net_device *orig_dev);
 static inline void dev_base_seq_inc(struct net *net)
 {
 	while (++net->dev_base_seq == 0);
@@ -360,10 +352,6 @@
 
 *******************************************************************************/
 
-#ifdef CONFIG_AVM_RECV_HOOKS
-static int (*avm_recvhook)(struct sk_buff *skb);
-static int (*avm_early_recvhook)(struct sk_buff *skb);
-
 void set_avm_recvhook(int (*recvhook)(struct sk_buff *skb))
 {
 	avm_recvhook = recvhook;
@@ -375,7 +363,6 @@
 	avm_early_recvhook = recvhook;
 }
 EXPORT_SYMBOL(set_avm_early_recvhook);
-#endif
 
 /*
  * Add a protocol ID to the list. Now that the input handler is
@@ -699,8 +686,14 @@
 struct net_device *__dev_get_by_name(struct net *net, const char *name)
 {
 	struct net_device *dev;
-	struct hlist_head *head = dev_name_hash(net, name);
+	struct hlist_head *head;
 
+#if defined(CONFIG_IFX_PPA_API) || defined(CONFIG_IFX_PPA_API_MODULE)
+	if (net == NULL) {
+		net = &init_net;
+	}
+#endif
+	head = dev_name_hash(net, name);
 	hlist_for_each_entry(dev, head, name_hlist)
 		if (!strncmp(dev->name, name, IFNAMSIZ))
 			return dev;
```
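The `__dev_get_by_name()` hunk makes the FB7490 kernel accept a NULL namespace when `CONFIG_IFX_PPA_API` is set, and it silently substitutes `init_net`. The userspace sketch below shows only that fallback. `fake_net`, `fake_dev`, `init_net_sketch` and `get_by_name()` are hypothetical stand-ins, and a linear scan replaces the kernel's name hash.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

#define IFNAMSIZ 16 /* same value as the kernel's IFNAMSIZ */

/* Hypothetical, heavily simplified stand-ins for struct net_device / struct net. */
struct fake_dev {
	char name[IFNAMSIZ];
};

struct fake_net {
	const struct fake_dev *devs;
	size_t ndevs;
};

static const struct fake_dev init_devs[] = { {"lan"}, {"dsl"} };
static struct fake_net init_net_sketch = { init_devs, 2 };

/* Mirrors the patched lookup: a NULL namespace falls back to the initial
 * namespace before the name search runs (linear scan instead of a hash). */
static const struct fake_dev *get_by_name(struct fake_net *net, const char *name)
{
	if (net == NULL)
		net = &init_net_sketch;

	for (size_t i = 0; i < net->ndevs; i++)
		if (!strncmp(net->devs[i].name, name, IFNAMSIZ))
			return &net->devs[i];
	return NULL;
}
```

Upstream callers always pass a valid namespace. The fallback only matters for out-of-tree code (here presumably the PPA modules) that passes NULL.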

```diff
@@ -1601,37 +1594,59 @@
 
 static struct static_key netstamp_needed __read_mostly;
 #ifdef HAVE_JUMP_LABEL
-/* We are not allowed to call static_key_slow_dec() from irq context
- * If net_disable_timestamp() is called from irq context, defer the
- * static_key_slow_dec() calls.
- */
 static atomic_t netstamp_needed_deferred;
+static atomic_t netstamp_wanted;
+static void netstamp_clear(struct work_struct *work)
+{
+	int deferred = atomic_xchg(&netstamp_needed_deferred, 0);
+	int wanted;
+
+	wanted = atomic_add_return(deferred, &netstamp_wanted);
+	if (wanted > 0)
+		static_key_enable(&netstamp_needed);
+	else
+		static_key_disable(&netstamp_needed);
+}
+static DECLARE_WORK(netstamp_work, netstamp_clear);
 #endif
 
 void net_enable_timestamp(void)
 {
 #ifdef HAVE_JUMP_LABEL
-	int deferred = atomic_xchg(&netstamp_needed_deferred, 0);
+	int wanted;
 
-	if (deferred) {
-		while (--deferred)
-			static_key_slow_dec(&netstamp_needed);
-		return;
+	while (1) {
+		wanted = atomic_read(&netstamp_wanted);
+		if (wanted <= 0)
+			break;
+		if (atomic_cmpxchg(&netstamp_wanted, wanted, wanted + 1) == wanted)
+			return;
 	}
-#endif
+	atomic_inc(&netstamp_needed_deferred);
+	schedule_work(&netstamp_work);
+#else
 	static_key_slow_inc(&netstamp_needed);
+#endif
 }
 EXPORT_SYMBOL(net_enable_timestamp);
 
 void net_disable_timestamp(void)
 {
 #ifdef HAVE_JUMP_LABEL
-	if (in_interrupt()) {
-		atomic_inc(&netstamp_needed_deferred);
-		return;
+	int wanted;
+
+	while (1) {
+		wanted = atomic_read(&netstamp_wanted);
+		if (wanted <= 1)
+			break;
+		if (atomic_cmpxchg(&netstamp_wanted, wanted, wanted - 1) == wanted)
+			return;
 	}
-#endif
+	atomic_dec(&netstamp_needed_deferred);
+	schedule_work(&netstamp_work);
+#else
 	static_key_slow_dec(&netstamp_needed);
+#endif
 }
 EXPORT_SYMBOL(net_disable_timestamp);
 
```
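The timestamp hunk replaces the old scheme, which deferred `static_key_slow_dec()` while in interrupt context, with a counter, `netstamp_wanted`. That counter is changed lock-free as long as it stays away from zero. Only a 0 to 1 or 1 to 0 transition goes through `netstamp_clear()` on a workqueue, so `static_key_enable()`/`static_key_disable()` never run in interrupt context.

A userspace sketch of the same bookkeeping with C11 atomics follows. All names are made up, and the slow path runs synchronously instead of via `schedule_work()`.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Userspace stand-ins for the kernel objects in the hunk. */
static atomic_int wanted;   /* plays netstamp_wanted */
static atomic_int deferred; /* plays netstamp_needed_deferred */
static bool key_on;         /* plays the netstamp_needed static key */

/* Like netstamp_clear(): fold the deferred delta into the counter and
 * switch the key to match the result. */
static void clear_sketch(void)
{
	int d = atomic_exchange(&deferred, 0);
	int w = atomic_fetch_add(&wanted, d) + d; /* value after the add */

	key_on = (w > 0);
}

/* "Add delta only while the counter is above floor", the same shape as
 * the atomic_read()/atomic_cmpxchg() loops in the hunk. */
static bool try_fast(int floor, int delta)
{
	int cur = atomic_load(&wanted);

	while (cur > floor) {
		/* On failure (even a spurious one) cur is reloaded. */
		if (atomic_compare_exchange_weak(&wanted, &cur, cur + delta))
			return true;
	}
	return false;
}

static void enable_sketch(void)
{
	if (!try_fast(0, +1)) {          /* key may be off: slow path */
		atomic_fetch_add(&deferred, 1);
		clear_sketch();          /* kernel: schedule_work() */
	}
}

static void disable_sketch(void)
{
	if (!try_fast(1, -1)) {          /* would reach 0: slow path */
		atomic_fetch_sub(&deferred, 1);
		clear_sketch();          /* kernel: schedule_work() */
	}
}
```

Running enable twice and then disable twice leaves the key on after the first enable and turns it off only on the last disable. The middle two calls never touch the key.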

```diff
@@ -1648,8 +1663,7 @@
 			__net_timestamp(SKB);		\
 	}						\
 
-static inline bool is_skb_forwardable(struct net_device *dev,
-				      struct sk_buff *skb)
+bool is_skb_forwardable(struct net_device *dev, struct sk_buff *skb)
 {
 	unsigned int len;
 
@@ -1668,6 +1682,7 @@
 
 	return false;
 }
+EXPORT_SYMBOL_GPL(is_skb_forwardable);
 
 /**
  * dev_forward_skb - loopback an skb to another netif
@@ -2276,7 +2291,7 @@
 			goto out;
 	}
 
-	*(__sum16 *)(skb->data + offset) = csum_fold(csum);
+	*(__sum16 *)(skb->data + offset) = csum_fold(csum) ?: CSUM_MANGLED_0;
 out_set_summed:
 	skb->ip_summed = CHECKSUM_NONE;
 out:
```
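`csum_fold()` reduces a 32-bit partial ones'-complement sum to a 16-bit checksum. The FB7490 side adds `?: CSUM_MANGLED_0` so that a folded result of 0 is stored as 0xffff instead. For UDP, a transmitted checksum of 0 means "no checksum" (RFC 768), while 0xffff represents the same value in ones'-complement arithmetic.

The portable sketch below covers both steps. The function names are illustrative, not kernel API.

```c
#include <assert.h>
#include <stdint.h>

#define CSUM_MANGLED_0 ((uint16_t)0xffff) /* same value as the kernel macro */

/* Userspace equivalent of csum_fold(): fold 32 -> 16 bits with
 * end-around carry, then return the ones' complement. */
static uint16_t csum_fold_sketch(uint32_t sum)
{
	sum = (sum & 0xffff) + (sum >> 16); /* may produce a carry into bit 16 */
	sum = (sum & 0xffff) + (sum >> 16); /* absorb that carry */
	return (uint16_t)~sum;
}

/* What the patched skb_checksum_help() stores: never 0, use 0xffff instead. */
static uint16_t csum_fold_nonzero(uint32_t sum)
{
	uint16_t c = csum_fold_sketch(sum);

	return c ? c : CSUM_MANGLED_0;
}
```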

```diff
@@ -2503,9 +2518,9 @@
 	if (skb->ip_summed != CHECKSUM_NONE &&
 	    !can_checksum_protocol(features, protocol)) {
 		features &= ~NETIF_F_ALL_CSUM;
-	} else if (illegal_highdma(dev, skb)) {
-		features &= ~NETIF_F_SG;
 	}
+	if (illegal_highdma(dev, skb))
+		features &= ~NETIF_F_SG;
 
 	return features;
 }
```
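In the FB7590 code the `illegal_highdma()` check sat in an `else if`. Whenever checksum offload had just been cleared, `NETIF_F_SG` stayed set even for packets with fragments the device cannot DMA. The FB7490 version tests both conditions independently.

A reduced sketch of the difference follows. The `F_*` flag values are hypothetical, not the real `NETIF_F_*` bits.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical bit values standing in for NETIF_F_SG / NETIF_F_ALL_CSUM. */
#define F_SG       (1u << 0)
#define F_ALL_CSUM (1u << 1)

/* FB7590 side: the highdma check is skipped when the csum branch runs. */
static uint32_t harmonize_old(uint32_t f, bool csum_unusable, bool highdma)
{
	if (csum_unusable)
		f &= ~F_ALL_CSUM;
	else if (highdma)
		f &= ~F_SG;
	return f;
}

/* FB7490 side: both conditions are evaluated on their own. */
static uint32_t harmonize_new(uint32_t f, bool csum_unusable, bool highdma)
{
	if (csum_unusable)
		f &= ~F_ALL_CSUM;
	if (highdma)
		f &= ~F_SG;
	return f;
}
```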

```diff
@@ -2564,13 +2579,13 @@
 	int rc = NETDEV_TX_OK;
 	unsigned int skb_len;
 
-#ifdef CONFIG_AVM_PA
-	(void)avm_pa_dev_snoop_transmit(AVM_PA_DEVINFO(dev), skb);
-#endif
-
 	if (likely(!skb->next)) {
 		netdev_features_t features;
 
+		/* TODO: #if defined(CONFIG_IMQ) || defined(CONFIG_IMQ_MODULE) patch
+		 * left out !!!
+		 */
+
 		/*
 		 * If device doesn't need skb->dst, release it right now while
 		 * its hot in this cpu cache
@@ -2624,32 +2639,16 @@
 			}
 		}
 
-#if defined(CONFIG_IMQ) || defined(CONFIG_IMQ_MODULE)
-		if (!list_empty(&ptype_all) &&
-		    !(skb->imq_flags & IMQ_F_ENQUEUE))
-#else
 		if (!list_empty(&ptype_all))
-#endif
 			dev_queue_xmit_nit(skb, dev);
 
-#ifdef CONFIG_ETHERNET_PACKET_MANGLE
-		if (!dev->eth_mangle_tx ||
-		    (skb = dev->eth_mangle_tx(dev, skb)) != NULL)
-#else
-		if (1)
+		skb_len = skb->len;
+#ifdef CONFIG_NET_DEBUG_SKBUFF_LEAK
+		skb_track_funccall(skb, ops->ndo_start_xmit);
 #endif
-		{
-			skb_len = skb->len;
-			rc = ops->ndo_start_xmit(skb, dev);
-			trace_net_dev_xmit(skb, rc, dev, skb_len);
-		} else {
-			rc = NETDEV_TX_OK;
-		}
-	#ifdef CONFIG_LTQ_IPQOS_BRIDGE_EBT_IMQ
-		if (txq && (rc == NETDEV_TX_OK))
-	#else
+		rc = ops->ndo_start_xmit(skb, dev);
+		trace_net_dev_xmit(skb, rc, dev, skb_len);
 		if (rc == NETDEV_TX_OK)
-	#endif
 			txq_trans_update(txq);
 		return rc;
 	}
@@ -2664,19 +2663,9 @@
 		if (!list_empty(&ptype_all))
 			dev_queue_xmit_nit(nskb, dev);
 
-#ifdef CONFIG_ETHERNET_PACKET_MANGLE
-		if (!dev->eth_mangle_tx ||
-		    (nskb = dev->eth_mangle_tx(dev, nskb)) != NULL)
-#else
-		if (1)
-#endif
-		{
-			skb_len = nskb->len;
-			rc = ops->ndo_start_xmit(nskb, dev);
-			trace_net_dev_xmit(nskb, rc, dev, skb_len);
-		} else {
-			rc = NETDEV_TX_OK;
-		}
+		skb_len = nskb->len;
+		rc = ops->ndo_start_xmit(nskb, dev);
+		trace_net_dev_xmit(nskb, rc, dev, skb_len);
 		if (unlikely(rc != NETDEV_TX_OK)) {
 			if (rc & ~NETDEV_TX_MASK)
 				goto out_kfree_gso_skb;
@@ -2684,9 +2673,6 @@
 			skb->next = nskb;
 			return rc;
 		}
-	#ifdef CONFIG_LTQ_IPQOS_BRIDGE_EBT_IMQ
-		if (txq)
-	#endif
 		txq_trans_update(txq);
 		if (unlikely(netif_xmit_stopped(txq) && skb->next))
 			return NETDEV_TX_BUSY;
@@ -2782,9 +2768,6 @@
 		rc = NET_XMIT_SUCCESS;
 	} else {
 		skb_dst_force(skb);
-#if defined(CONFIG_AVM_PA) && defined(AVM_PA_MARK_SHAPED)
-		avm_pa_mark_shaped(skb);
-#endif
 		rc = q->enqueue(skb, q) & NET_XMIT_MASK;
 		if (qdisc_run_begin(q)) {
 			if (unlikely(contended)) {
@@ -2867,41 +2850,32 @@
 	struct netdev_queue *txq;
 	struct Qdisc *q;
 	int rc = -ENOMEM;
-	int ret=0;
+
+#ifdef CONFIG_NET_DEBUG_SKBUFF_LEAK
+	skb_track_caller(skb);
+#endif
 
 	skb_reset_mac_header(skb);
 
-	avm_simple_profiling_skb(0, skb);
+#ifdef CONFIG_AVM_PA
+	if (vlan_tx_tag_present(skb)) {
+		skb = __vlan_put_tag(skb, skb->vlan_proto,
+				     vlan_tx_tag_get(skb));
+		if (unlikely(!skb))
+			return rc;
+
+		skb->vlan_tci = 0;
+	}
+	(void)avm_pa_dev_snoop_transmit(AVM_PA_DEVINFO(dev), skb);
+#endif
 
 	/* Disable soft irqs for various locks below. Also
 	 * stops preemption for RCU.
 	 */
 	rcu_read_lock_bh();
 
-#ifdef CONFIG_LANTIQ_MCAST_HELPER_MODULE
-	if(mcast_helper_sig_check_update_ptr != NULL)
-	{
-		ret = mcast_helper_sig_check_update_ptr(skb);
-		if (ret == 1)
-		{
-			//dev_kfree_skb_any(skb);
-			//rcu_read_unlock_bh();
-			//return rc;
-		}
-	}
-#endif
-
 	skb_update_prio(skb);
 
-#ifdef CONFIG_OFFLOAD_FWD_MARK
-	/* Don't forward if offload device already forwarded */
-	if (skb->offload_fwd_mark &&
-	    skb->offload_fwd_mark == dev->offload_fwd_mark) {
-		consume_skb(skb);
-		rc = NET_XMIT_SUCCESS;
-		goto out;
-	}
-#endif
 	txq = netdev_pick_tx(dev, skb);
 	q = rcu_dereference_bh(txq->qdisc);
 
@@ -2989,10 +2963,6 @@
 	__raise_softirq_irqoff(NET_RX_SOFTIRQ);
 }
 
-#if defined(CONFIG_LTQ_PPA_API_SW_FASTPATH)
-extern int32_t (*ppa_hook_sw_fastpath_send_fn)(struct sk_buff *skb);
-#endif
-
 #ifdef CONFIG_RPS
 
 /* One global table that all flow-based protocols share. */
@@ -3278,6 +3248,10 @@
 {
 	int ret;
 
+#ifdef CONFIG_NET_DEBUG_SKBUFF_LEAK
+	skb_track_caller(skb);
+#endif
+
 	/* if netpoll wants it, pretend we never saw it */
 	if (netpoll_rx(skb))
 		return NET_RX_DROP;
@@ -3285,14 +3259,6 @@
 	net_timestamp_check(netdev_tstamp_prequeue, skb);
 
 	trace_netif_rx(skb);
-
-#if defined(CONFIG_LTQ_PPA_API_SW_FASTPATH)
-	if(ppa_hook_sw_fastpath_send_fn!=NULL) {
-		if(ppa_hook_sw_fastpath_send_fn(skb) == 0)
-			return NET_RX_SUCCESS;
-	}
-#endif
-
 #ifdef CONFIG_RPS
 	if (static_key_false(&rps_needed)) {
 		struct rps_dev_flow voidflow, *rflow = &voidflow;
@@ -3338,6 +3304,10 @@
 {
 	struct softnet_data *sd = &__get_cpu_var(softnet_data);
+#ifdef CONFIG_FUSIV_KERNEL_PROFILER_MODULE
+	int profileResult;
+	profileResult = loggerProfile(net_tx_action);
+#endif
 
 	if (sd->completion_queue) {
 		struct sk_buff *clist;
@@ -3390,6 +3360,10 @@
 			}
 		}
 	}
+#ifdef CONFIG_FUSIV_KERNEL_PROFILER_MODULE
+	if(profileResult == 0)
+		profileResult = loggerProfile(net_tx_action);
+#endif
 }
 
 #if (defined(CONFIG_BRIDGE) || defined(CONFIG_BRIDGE_MODULE)) && \
@@ -3427,7 +3401,6 @@
 
 	q = rxq->qdisc;
 	if (q != &noop_qdisc) {
-		BUG_ON(!in_softirq());
 		spin_lock(qdisc_lock(q));
 		if (likely(!test_bit(__QDISC_STATE_DEACTIVATED, &q->state)))
 			result = qdisc_enqueue_root(skb, q);
@@ -3437,17 +3410,7 @@
 	return result;
 }
 
-int check_ingress(struct sk_buff *skb)
-{
-	struct netdev_queue *rxq = rcu_dereference(skb->dev->ingress_queue);
-
-	if (!rxq || rxq->qdisc == &noop_qdisc)
-		return 1;
-	return 0;
-}
-EXPORT_SYMBOL(check_ingress);
-
-struct sk_buff *handle_ing(struct sk_buff *skb,
+static inline struct sk_buff *handle_ing(struct sk_buff *skb,
 					 struct packet_type **pt_prev,
 					 int *ret, struct net_device *orig_dev)
 {
@@ -3472,10 +3435,25 @@
 	skb->tc_verd = 0;
 	return skb;
 }
-EXPORT_SYMBOL(handle_ing);
 #endif
 
 /**
+ * netdev_is_rx_handler_busy - check if receive handler is registered
+ * @dev: device to check
+ *
+ * Check if a receive handler is already registered for a given device.
+ * Return true if there one.
+ *
+ * The caller must hold the rtnl_mutex.
+ */
+bool netdev_is_rx_handler_busy(struct net_device *dev)
+{
+	ASSERT_RTNL();
+	return dev && rtnl_dereference(dev->rx_handler);
+}
+EXPORT_SYMBOL_GPL(netdev_is_rx_handler_busy);
+
+/**
  * netdev_rx_handler_register - register receive handler
  * @dev: device to register a handler for
  * @rx_handler: receive handler to register
@@ -3620,8 +3598,8 @@
 		goto drop;
 
 #ifdef CONFIG_AVM_PA
-#ifdef CONFIG_AVM_NET_DEBUG_SKBUFF_LEAK
-	skb_track_funccall(skb, avm_pa_dev_receive);
+#ifdef CONFIG_NET_DEBUG_SKBUFF_LEAK
+	skb_track_funccall(skb, avm_pa_dev_receive);
 #endif
 	if (avm_pa_dev_receive(AVM_PA_DEVINFO(skb->dev), skb) == 0) {
 		ret = NET_RX_SUCCESS;
@@ -3629,7 +3607,6 @@
 	}
 #endif
 
-#ifdef CONFIG_AVM_RECV_HOOKS
 	if (avm_early_recvhook && (*avm_early_recvhook)(skb)) {
 		/*
 		 * paket consumed by hook
@@ -3637,7 +3614,6 @@
 		ret = NET_RX_SUCCESS;
 		goto out;
 	}
-#endif
 
 	if (vlan_tx_tag_present(skb)) {
 		if (pt_prev) {
@@ -3681,15 +3657,13 @@
 		skb->vlan_tci = 0;
 	}
 
-#ifdef CONFIG_AVM_RECV_HOOKS
-	if (avm_recvhook && (*avm_recvhook)(skb)) {
+	if (skb && avm_recvhook && (*avm_recvhook)(skb)) {
 		/*
 		 * paket consumed by hook
 		 */
 		ret = NET_RX_SUCCESS;
 		goto out;
 	}
-#endif
 
 	/* deliver only exact match when indicated */
 	null_or_dev = deliver_exact ? skb->dev : NULL;
```
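On the FB7490 side the AVM receive hooks are no longer wrapped in `CONFIG_AVM_RECV_HOOKS`. The late hook also checks that `skb` is non-NULL, presumably as a defensive measure after the VLAN handling just above it. The mechanism itself is a plain function pointer with a setter, consulted on the receive path.

Below is a single-threaded userspace sketch of that pattern. `pkt`, `set_recvhook()`, `receive()` and `grab_proto_88()` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for struct sk_buff. */
struct pkt {
	int proto;
};

/* Registered callback; returns non-zero if it consumed the packet. */
static int (*recvhook)(struct pkt *p);

/* Counterpart of set_avm_recvhook(); passing NULL unregisters. */
static void set_recvhook(int (*hook)(struct pkt *p))
{
	recvhook = hook;
}

enum rx_result { RX_CONSUMED, RX_DELIVERED };

/* Counterpart of the receive-path check: the hook runs only when both a
 * packet and a hook are present; otherwise normal delivery continues. */
static enum rx_result receive(struct pkt *p)
{
	if (p && recvhook && recvhook(p))
		return RX_CONSUMED;
	return RX_DELIVERED;
}

/* Example hook (hypothetical): claims packets whose proto is 0x88. */
static int grab_proto_88(struct pkt *p)
{
	return p->proto == 0x88;
}
```

The sketch reads the pointer without any synchronization, just like the lines in the diff. It says nothing about how AVM's modules serialize registration against packet reception.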

```diff
@@ -3770,7 +3744,6 @@
 {
 	int ret;
 
-	BUG_ON(!in_softirq());
 	BUG_ON(skb->sk);
 
 	net_timestamp_check(netdev_tstamp_prequeue, skb);
@@ -4048,7 +4021,9 @@
 	    pinfo->nr_frags &&
 	    !PageHighMem(skb_frag_page(frag0))) {
 		NAPI_GRO_CB(skb)->frag0 = skb_frag_address(frag0);
-		NAPI_GRO_CB(skb)->frag0_len = skb_frag_size(frag0);
+		NAPI_GRO_CB(skb)->frag0_len = min_t(unsigned int,
+						    skb_frag_size(frag0),
+						    skb->end - skb->tail);
 	}
 }
 
```
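The GRO hunk no longer takes the full size of the first page fragment as `frag0_len`. Instead it caps the value at `skb->end - skb->tail`, the free room after the linear data.

A minimal sketch of that clamp follows. `gro_view` is a hypothetical flattening of the skb fields involved, with offsets instead of pointers.

```c
#include <assert.h>

/* Hypothetical, flattened view of the values used by the new line. */
struct gro_view {
	unsigned int frag0_size; /* skb_frag_size(frag0) */
	unsigned int end;        /* offset of skb->end */
	unsigned int tail;       /* offset of skb->tail */
};

/* Same result as min_t(unsigned int, skb_frag_size(frag0),
 * skb->end - skb->tail). */
static unsigned int frag0_len(const struct gro_view *v)
{
	unsigned int room = v->end - v->tail;

	return v->frag0_size < room ? v->frag0_size : room;
}
```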

```diff
@@ -4337,6 +4312,10 @@
 	unsigned long time_limit = jiffies + 2;
 	int budget = netdev_budget;
 	void *have;
+#ifdef CONFIG_FUSIV_KERNEL_PROFILER_MODULE
+	int profileResult;
+	profileResult = loggerProfile(net_rx_action);
+#endif
 
 	local_irq_disable();
 
@@ -4417,7 +4396,10 @@
 	 */
 	dma_issue_pending_all();
 #endif
-
+#ifdef CONFIG_FUSIV_KERNEL_PROFILER_MODULE
+	if(profileResult == 0)
+		profileResult = loggerProfile(net_rx_action);
+#endif
 	return;
 
 softnet_break:
@@ -4858,8 +4840,7 @@
 
 	dev->flags = (flags & (IFF_DEBUG | IFF_NOTRAILERS | IFF_NOARP |
 			       IFF_DYNAMIC | IFF_MULTICAST | IFF_PORTSEL |
-			       IFF_AUTOMEDIA | IFF_NOMULTIPATH | IFF_MPBACKUP |
-			       IFF_MPMASTER)) |
+			       IFF_AUTOMEDIA)) |
 		     (dev->flags & (IFF_UP | IFF_VOLATILE | IFF_PROMISC |
 				    IFF_ALLMULTI));
 
```
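`__dev_change_flags()` builds the new `dev->flags` from two masks:

- bits the caller may set directly, taken from the request;
- kernel-controlled bits such as `IFF_UP` and `IFF_PROMISC`, carried over from the current value and changed by the code further down in that function.

The FB7590 kernel also let callers set `IFF_NOMULTIPATH`, `IFF_MPBACKUP` and `IFF_MPMASTER`. On the FB7490 those requested bits are masked out.

A sketch of the masking follows. The `F_*` values and masks are hypothetical reductions of the `IFF_*` sets.

```c
#include <assert.h>

/* Hypothetical bit values; the real IFF_* constants differ. */
#define F_UP        0x1u /* kernel-controlled */
#define F_PROMISC   0x2u /* kernel-controlled */
#define F_MULTICAST 0x4u /* caller may set directly */
#define F_NOARP     0x8u /* caller may set directly */

#define CALLER_MASK (F_MULTICAST | F_NOARP)
#define KERNEL_MASK (F_UP | F_PROMISC)

/* Same shape as the dev->flags assignment: caller bits come from the
 * request, kernel bits from the current flags, anything else is dropped. */
static unsigned int merge_flags(unsigned int cur, unsigned int requested)
{
	return (requested & CALLER_MASK) | (cur & KERNEL_MASK);
}
```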

```diff
@@ -4893,13 +4874,6 @@
 		dev_set_promiscuity(dev, inc);
 	}
 
-	/* hack handling for MULTIPATH, interface down and up shouldn't set multipath
-	 * on only Traffic Steering should do it, this is not clean.
-	 */
-	if ((old_flags ^ flags) & IFF_UP) { /* Bit is different ? */
-		dev->flags |= IFF_NOMULTIPATH;
-	}
-
 	/* NOTE: order of synchronization of IFF_PROMISC and IFF_ALLMULTI
 	   is important. Some (broken) drivers set IFF_PROMISC, when
 	   IFF_ALLMULTI is requested not asking us and not reporting.
@@ -5722,8 +5696,9 @@
 		WARN_ON(rcu_access_pointer(dev->ip6_ptr));
 		WARN_ON(dev->dn_ptr);
 #ifdef CONFIG_AVM_PA
-		avm_pa_dev_unregister(AVM_PA_DEVINFO(dev));
+		avm_pa_dev_unregister(AVM_PA_DEVINFO(dev));
 #endif
+
 		if (dev->destructor)
 			dev->destructor(dev);
 
@@ -5754,12 +5729,6 @@
 }
 EXPORT_SYMBOL(netdev_stats_to_stats64);
 
-#if (defined(CONFIG_LTQ_STAT_HELPER) || defined(CONFIG_LTQ_STAT_HELPER_MODULE))
-struct rtnl_link_stats64* (*dev_get_extended_stats64_fn)(struct net_device *dev,
-		struct rtnl_link_stats64 *storage) = NULL;
-EXPORT_SYMBOL(dev_get_extended_stats64_fn);
-#endif
-
 /**
  * dev_get_stats - get network device statistics
  * @dev: device to get statistics from
@@ -5783,10 +5752,6 @@
 	} else {
 		netdev_stats_to_stats64(storage, &dev->stats);
 	}
-#if (defined(CONFIG_LTQ_STAT_HELPER) || defined(CONFIG_LTQ_STAT_HELPER_MODULE))
-	if (dev_get_extended_stats64_fn)
-		dev_get_extended_stats64_fn(dev, storage);
-#endif
 	storage->rx_dropped += atomic_long_read(&dev->rx_dropped);
 	return storage;
 }
@@ -5891,7 +5856,7 @@
 	INIT_LIST_HEAD(&dev->upper_dev_list);
 	dev->priv_flags = IFF_XMIT_DST_RELEASE;
 #ifdef CONFIG_AVM_PA
-	avm_pa_dev_init(AVM_PA_DEVINFO(dev));
+	avm_pa_dev_init(AVM_PA_DEVINFO(dev));
 #endif
 	setup(dev);
 
peh@vidar:~>
```